A customer support hire rarely fails because the person “didn’t care.” Support hires fail because leaders interview for likability while the job demands judgment under pressure. Customers now treat speed and clarity as table stakes, and they switch brands quickly when service disappoints. Zendesk reports that more than 50% of consumers will switch to a competitor after one bad experience. When churn rate moves because of one mishandled interaction, your hiring process becomes a revenue decision, not an admin task.

Many teams respond by outsourcing to a virtual assistant (VA) or a small business process outsourcing (BPO) partner, expecting quick relief. Outsourcing can absolutely work, but the hiring bar must rise, not fall. Customer Experience (CX) work forces your agent to interpret policy, manage emotion, and protect margins in the same conversation. The best interview therefore tests empathy and operational discipline together. This interview scorecard gives you 25 customer support VA interview questions with specific red flags, so you can hire for execution instead of vibes.

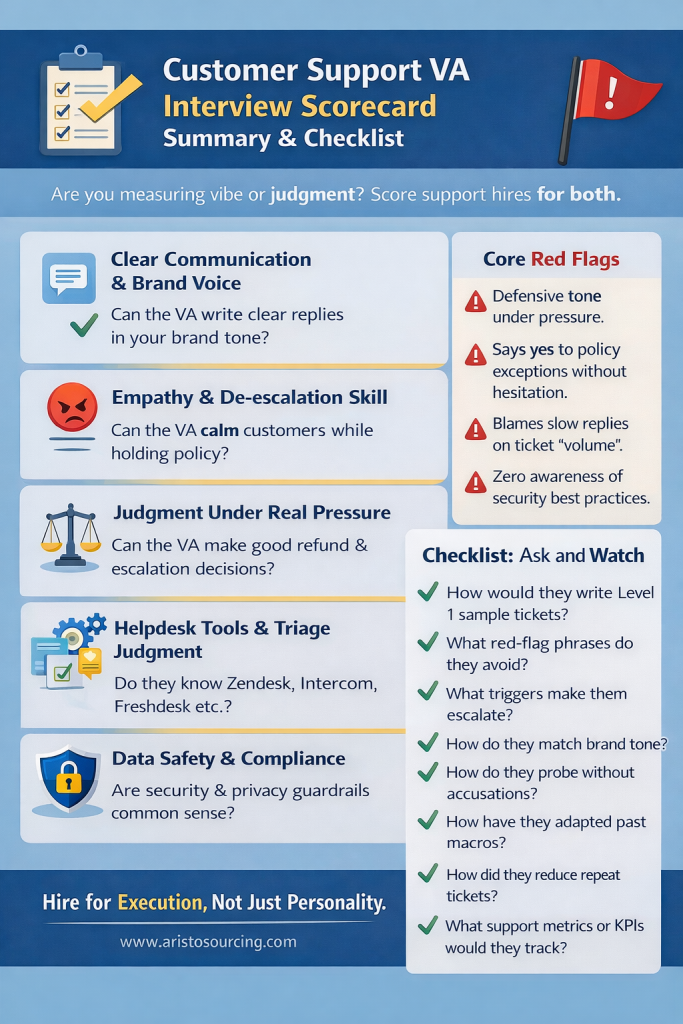

Category summary table

| Category | Key competency | Why it matters |
|---|---|---|
| A. Communication and tone | clear writing, brand voice | prevents robotic replies and confusion |
| B. De-escalation | empathy, conflict handling | reduces refunds, chargebacks, bad reviews |
| C. Judgment and policy | escalation discipline, guardrails | protects margin and prevents policy drift |
| D. Tools and workflow | triage, macros, tagging taxonomy | improves FRT, consistency, and reporting |
| E. Remote execution | ownership, async updates | keeps work moving across time zones |
| F. Security and compliance | least privilege, consent rules | protects customer data and brand risk |

Why structured interviews beat “gut feel” for support roles

Support leaders often run conversational interviews because they want the hire to feel “human.” That instinct makes sense, but the format still needs structure. Research consistently shows that structured interviews predict job performance better than unstructured conversations. Several practitioner summaries of the research also note that structured interviews can come close to twice the predictive power of unstructured interviews, largely because they reduce noise and force job-relevant evidence.

Customer support work amplifies the value of structure because the role involves repeated decision patterns. An agent must decide when to ask for proof, when to escalate, when to offer credit, and how to phrase a refusal without triggering more anger. Those decisions look small, but they compound into churn rate and trust. You cannot reliably evaluate that judgment with a free-flowing chat. You can evaluate it with scenario questions and consistent scoring.

How to use this scorecard (simple and disciplined)

Run a structured interview with the same questions in the same order for every candidate. Score each answer from 1 to 5, and write one line of evidence that justifies your score. A “5” includes a specific example, a decision process, and a measurable outcome. A “3” sounds plausible but lacks proof, detail, or clear reasoning. A “1” shows defensiveness, blame, policy-breaking instincts, or vague answers that would collapse under real ticket pressure.
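If you track scores in a spreadsheet or a small script, the scoring rule above can be sketched roughly as follows. This is a minimal illustration, not a prescribed tool: the names `Answer`, `CATEGORY_RANGES`, and `summarize` are hypothetical, and the only rules it encodes are the ones stated here (a 1 to 5 score, one line of evidence per answer, and the A to F question groupings from this scorecard).

```python
from dataclasses import dataclass

# Question ranges per scorecard category (A-F), as laid out in this article.
CATEGORY_RANGES = {
    "A": range(1, 6),    # Communication and tone
    "B": range(6, 11),   # Empathy and de-escalation
    "C": range(11, 16),  # Judgment, policy, and escalation
    "D": range(16, 21),  # Tools, triage, and operating rhythm
    "E": range(21, 24),  # Remote execution and ownership
    "F": range(24, 26),  # Security and compliance
}


@dataclass
class Answer:
    question: int  # 1..25
    score: int     # 1..5
    evidence: str  # one line that justifies the score


def category_of(question: int) -> str:
    """Map a question number to its scorecard category letter."""
    for category, questions in CATEGORY_RANGES.items():
        if question in questions:
            return category
    raise ValueError(f"question {question} is outside 1-25")


def summarize(answers: list[Answer]) -> dict[str, float]:
    """Return the average score per category, rejecting unsupported scores."""
    by_category: dict[str, list[int]] = {}
    for answer in answers:
        if not 1 <= answer.score <= 5:
            raise ValueError(f"Q{answer.question}: score must be 1-5")
        if not answer.evidence.strip():
            raise ValueError(f"Q{answer.question}: add one line of evidence")
        by_category.setdefault(category_of(answer.question), []).append(answer.score)
    return {
        category: round(sum(scores) / len(scores), 2)
        for category, scores in sorted(by_category.items())
    }
```

Rejecting a score that has no evidence line is the point of the sketch: it keeps interviewers from recording a number they cannot justify later when comparing candidates.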

After the interview, run a short work sample as the final exam. The work sample should feel small enough to respect the candidate and real enough to predict performance. Keep it to five short tickets and a 30-minute time box, and pay for it if you want to signal seriousness. The candidate’s writing will reveal more than their talk, because support lives in writing across email and chat.

The “final exam” work sample (five tickets, one policy page)

Use five realistic tickets that force judgment, not just typing. Include one angry customer, one refund request outside the window, one address change request, one suspected fraud signal, and one technical question with missing information. Give the candidate a one-page tone guide and a short policy snippet, then ask for full replies that include next steps and a timeline. If you want a starting point for building scenarios, Help Scout publishes response templates and examples that you can adapt.

This work sample resolves the core hiring debate. Some candidates charm in conversation but write poorly under time pressure. Other candidates write with clarity and judgment but show less sparkle on calls. Support performance follows writing and decisions more than it follows charisma, so you should weight the test heavily.
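One way to make "weight the test heavily" concrete is a simple weighted average of the two 1 to 5 averages. The sketch below is illustrative only: the function name `final_score` and the 60/40 split are assumptions, not figures from this scorecard, so adjust the weight to your own hiring bar.

```python
def final_score(
    interview_avg: float,
    work_sample_avg: float,
    work_sample_weight: float = 0.6,  # assumed weight, not a recommendation from the scorecard
) -> float:
    """Blend interview and work-sample averages (both on the 1-5 scale)."""
    if not 0.0 <= work_sample_weight <= 1.0:
        raise ValueError("work_sample_weight must be between 0 and 1")
    for value in (interview_avg, work_sample_avg):
        if not 1.0 <= value <= 5.0:
            raise ValueError("averages must be on the 1-5 scale")
    blended = (
        work_sample_weight * work_sample_avg
        + (1.0 - work_sample_weight) * interview_avg
    )
    return round(blended, 2)


# A charming talker with weaker writing vs. a quieter candidate who writes well.
charming = final_score(interview_avg=4.5, work_sample_avg=3.0)
clear_writer = final_score(interview_avg=3.0, work_sample_avg=4.5)
```

With these assumed weights, the candidate who writes clearly under time pressure ends up ahead of the one who only interviews well, which matches the intent of the final exam.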

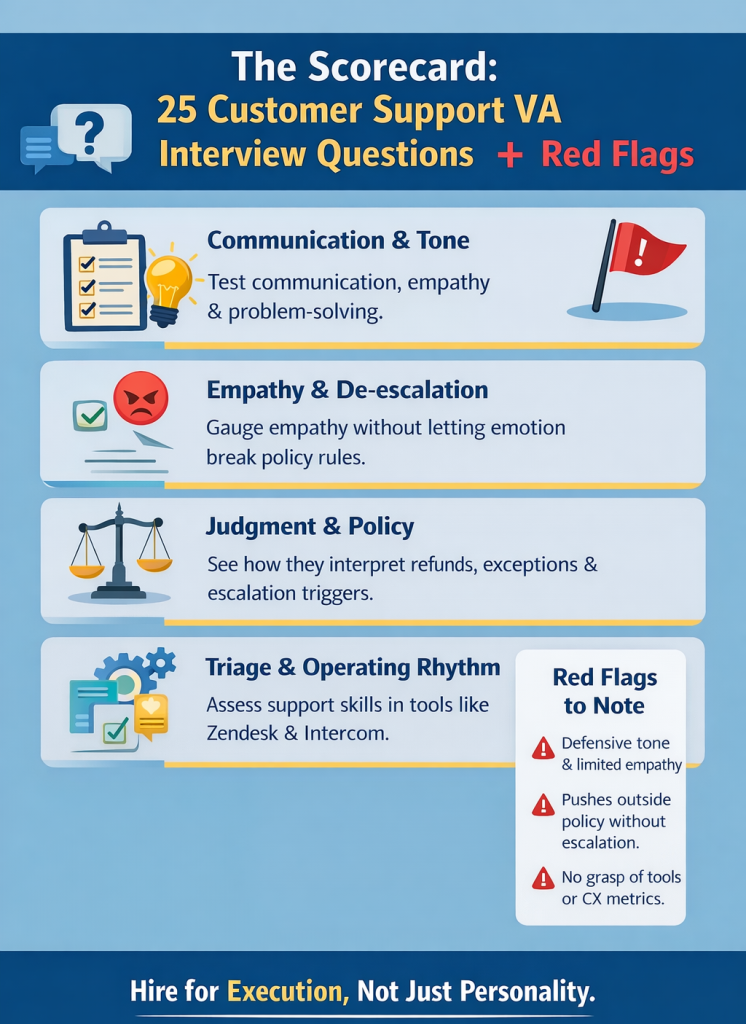

The scorecard: 25 customer support VA interview questions and red flags

A) Communication and tone of voice (Questions 1–5)

- “This is the third time I’m asking. Do you even read messages?” How do you reply?

  Red flags: defensiveness, long explanations, blaming the customer, no ownership, no next step.

- How do you keep replies clear when the issue is complex? Give a real example.

  Red flags: walls of text, no structure, no summary line, no steps.

- Tell me about a time you had to say “no” and still protect the relationship.

  Red flags: cold policy lecture, or giving in without guardrails.

- How do you learn a brand voice quickly? What do you do in week one?

  Red flags: “I just copy others,” no mention of tone guide, examples, or calibration.

- Rewrite this to sound human: “Your request has been received and will be processed accordingly.”

  Red flags: cannot rewrite, keeps robotic phrasing, removes empathy.

B) Empathy and de-escalation (Questions 6–10)

- Describe your de-escalation process in three steps, then give an example.

  Red flags: no process, vague “I calm them down,” escalates too late.

- What do you do when a customer insults you personally?

  Red flags: argues, mirrors tone, threatens, or escalates every time.

- Tell me about a moment you changed your mind after new information arrived.

  Red flags: ego defense, refusal to admit error, no learning signal.

- How do you handle customers who demand an exception to policy?

  Red flags: reflexive yes, or reflexive no, without tradeoffs and escalation triggers.

- Which phrases inflame tension, and what do you use instead?

  Red flags: cannot name any, uses accusatory language, uses “that’s not my job.”

C) Judgment, policy, and escalation (Questions 11–15)

- When should you escalate even if you can solve the ticket?

  Red flags: never escalates, or escalates only when stuck, ignores risk.

- Tell me about a time you prevented a bad refund decision. What did you do?

  Red flags: refunds to end the conversation, no margin awareness, no evidence mindset.

- How do you ask for proof (photo, order details) without sounding accusatory?

  Red flags: accusatory tone, “prove it,” or no mention of respectful framing.

- If two customers report the same issue, what do you do besides replying?

  Red flags: treats tickets as isolated, no pattern detection, no feedback loop.

- How do you respond when the customer demands a faster resolution than your SLA allows?

  Red flags: overpromises, gives fake timelines, or pushes the customer away.

D) Tools, triage, and operating rhythm (Questions 16–20)

- Which helpdesk tools have you used, and what workflows did you improve?

  Red flags: names tools but cannot describe views, triggers, macros, tagging, or routing.

- How do you reduce first response time without lowering quality?

  Red flags: “reply faster,” no mention of triage, templates, or prioritization.

- Explain your tagging taxonomy. How do tags support routing and reporting?

  Red flags: ignores taxonomy, cannot explain priorities, cannot explain reporting.

- Which metrics do you track weekly, and what actions do you take when they slip?

  Red flags: tracks nothing, mentions CSAT only, or blames volume without changes.

  Support teams typically track metrics like first response time (FRT) and other help desk metrics to evaluate performance and improve outcomes.

- Describe a weekly CX summary you would send to a manager. What would it include?

  Red flags: reports ticket count only, no drivers, no insights, no prevention suggestions.

E) Remote execution and ownership (Questions 21–23)

- Tell me about a time you worked asynchronously and still kept work moving.

  Red flags: needs constant supervision, delays without proactive updates.

- How do you handle handovers across time zones? Share your format.

  Red flags: “I just message them,” no structure, no ticket links, no deadlines.

- Describe a support mistake you made and the system change you implemented.

  Red flags: “I never make mistakes,” blames others, no prevention mindset.

F) Security and compliance (Questions 24–25)

- How do you protect customer data in daily work? Be specific.

  Red flags: shares passwords, uses personal accounts, screenshots sensitive data casually.

- If you run abandoned cart follow-ups or phone support, how do you stay compliant with consent rules?

  Red flags: ignores consent, treats outreach as “just messaging,” cannot describe guardrails.

Red flags that predict churn, not just bad performance

Some red flags look small during an interview, but they show up later as churn rate and reputational damage. Defensiveness drives escalation because customers sense disrespect immediately. Vagueness causes repeat contacts because the agent never sets clear next steps. Policy drift destroys margin because the agent trains customers to ask for exceptions. A lack of taxonomy and triage creates slow first response time because tickets pile up without a routing system. If your business competes on experience, you cannot afford those failure modes.

You should also avoid a common hiring bias: leaders often equate friendliness with competence. Friendly candidates can still break policy and create costly precedents. You should reward candidates who demonstrate calm empathy while still holding boundaries. That combination reduces the odds that a single bad interaction sends a customer to a competitor, which Zendesk data suggests happens more often than leaders expect.

The best solution: a scorecard, a system, and a matching process

This interview scorecard will raise hiring quality, but it will not replace onboarding. Your onboarding should mirror the scorecard categories, so the hire grows inside a clear system. You should give the agent a tone guide, an escalation matrix, a macro library, and a tagging taxonomy during the first week. You should also schedule weekly calibration so you keep voice and policy consistent as edge cases appear. That operating rhythm matters because customer expectations for responsiveness stay high, and teams must actively manage response time metrics like FRT.

When you hire support through Aristo Sourcing, you should still run this scorecard with every finalist. You will make the final choice with evidence, not hope. You will also build a support function that feels like a system, not a person. That approach turns outsourcing from a cost tactic into a CX strategy that protects retention.